Google and Covestro push the boundaries of near-term quantum computing

For the past three years, dedicated teams of quantum computing specialists from Google and Covestro have been working together to explore quantum computing for industrial chemistry research. Beyond deep technical expertise and attention to detail, this work requires openness to unexpected solutions, as well as honesty and trust between the partners. These are values that work, as the fruitful results of our collaboration show.

Our most recent study, published in Nature Physics, describes how simulation results obtained with noisy, pre-error-corrected quantum computers can be systematically improved towards the desired noise-free answer. The work sheds light on whether the current generation of quantum computers can be used for chemical simulation.

Purifying noisy quantum computations

The simulation of quantum mechanical systems is the most promising early application of quantum computing. However, for the industrially relevant case of molecules undergoing a chemical reaction, the noise present in today’s pre-error-corrected quantum computers poses a severe challenge. This is because quantities of interest, such as the rate at which a reaction happens, are governed by minute differences between large electronic ground-state energies. This sets a high bar for the required accuracy of any simulation. Error rates in near-term devices are high enough to stymie efforts to reach this bar unless errors can be successfully mitigated.
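
To give a feeling for how demanding this accuracy requirement is, the following minimal sketch assumes a simple Arrhenius-type dependence of the reaction rate on an energy barrier. This model, the function name `rate_error_factor` and the chosen temperature are illustrative assumptions and are not taken from the paper.

```python
import math

# Gas constant in kcal/(mol*K); the Arrhenius-type model below is an
# illustrative assumption, not the method used in the study.
R_KCAL_PER_MOL_K = 1.987204e-3


def rate_error_factor(energy_error_kcal_per_mol, temperature_k=298.15):
    """Factor by which a rate k ~ exp(-E / (R*T)) is off if E is off by the given error."""
    thermal_energy = R_KCAL_PER_MOL_K * temperature_k
    return math.exp(abs(energy_error_kcal_per_mol) / thermal_energy)


# Hypothetical energy errors, chosen only to show the trend.
for err in (0.1, 1.0, 3.0):
    factor = rate_error_factor(err)
    print(f"{err:.1f} kcal/mol error -> rate off by a factor of {factor:.1f}")
```

Under these assumptions, an energy error of about 1 kcal/mol already shifts the predicted room-temperature rate by roughly a factor of five, even though total electronic energies are many orders of magnitude larger than that.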

The question of whether sufficiently effective ways can be found to mitigate the effects of noise, without having to wait for large-scale quantum error correction, is a make-or-break issue for near-term applications in chemistry. That’s why we focused on this question in our latest paper, now published in Nature Physics.

This new paper studied two so-called “purification-based” error mitigation techniques that were developed previously at Google but had not yet been tested on real hardware. We decided to test them on algorithms for chemistry developed previously by Covestro and Qu & Co (now part of PASQAL). We wanted to set up a computation that would be close enough to the kind of computation one would want to do to solve real-world problems in chemistry, yet still simple enough to investigate the effectiveness of different error mitigation methods.
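
The article does not spell out how the two techniques work. As a rough illustration of the general idea behind purification, the sketch below compares a plain expectation value Tr(ρO) on a noisy state with the "purified" estimate Tr(ρ²O)/Tr(ρ²). The two-qubit state, the depolarizing noise model, the noise strength `p` and the observable are all hypothetical choices made for this example. The calculation is purely classical and only shows the arithmetic; on a device these quantities have to be estimated from repeated measurements.

```python
import numpy as np

# Hypothetical 2-qubit target state (illustrative assumption).
psi = np.array([1.0, 0.5, 0.5j, 0.2], dtype=complex)
psi /= np.linalg.norm(psi)
rho_ideal = np.outer(psi, psi.conj())

# Assumed noise model: global depolarizing noise of strength p.
dim = psi.size
p = 0.2
rho_noisy = (1 - p) * rho_ideal + p * np.eye(dim) / dim

# Observable: Pauli Z on the first qubit.
observable = np.diag([1.0, 1.0, -1.0, -1.0])


def expectation(rho, obs):
    """Plain expectation value Tr(rho * obs)."""
    return float(np.real(np.trace(rho @ obs)))


def purified_expectation(rho, obs):
    """Purified estimate Tr(rho^2 * obs) / Tr(rho^2)."""
    rho_sq = rho @ rho
    return float(np.real(np.trace(rho_sq @ obs) / np.trace(rho_sq)))


exact = expectation(rho_ideal, observable)
raw_error = abs(expectation(rho_noisy, observable) - exact)
purified_error = abs(purified_expectation(rho_noisy, observable) - exact)

print(f"exact value:     {exact:.4f}")
print(f"raw error:       {raw_error:.4f}")
print(f"purified error:  {purified_error:.4f}")
```

In this toy setting, squaring the density matrix increases the weight of the dominant (ideal) component relative to the noise, so the purified estimate lands much closer to the exact value than the raw one.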

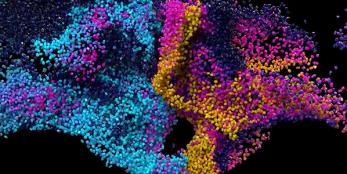

To set up such a computation, the team built on prior work from Covestro and Qu & Co (now part of PASQAL) in which electron correlations are taken into account but the electrons are described as coupled in pairs. This makes our new work a natural step forward from previous Google experiments simulating Hartree-Fock on a quantum device, a less precise method that can also be executed efficiently on a classical computer. Curiously, we found that this single step is sufficient to make exact classical simulation as challenging as a general quantum computation. It also brought to the fore complexities that the prior Google experiment could sidestep, making this work far closer in practice to an eventual beyond-classical simulation.

Hundredfold decrease in error achieved

We found the purification-based error mitigation techniques to be very effective under the real noise of the device, achieving a decrease in error of up to a hundredfold. Even better, this error suppression appears to hold up, or even improve, for larger computations. This type of scalability is crucial because larger computations present more chances for the noise to impact the outcome. These are extremely encouraging results, showing that an essential ingredient for pre-error-corrected quantum advantage actually works. This is especially so because the tested methods are “online” methods: they seamlessly react to changes in the strength and type of error happening on the device, and they actually mitigate the errors on the device rather than just extrapolating.

This error suppression, however, comes at the cost of having to run the quantum circuits more often than would be needed on a perfect device. Even an ideal quantum computer usually does not provide the desired answer after a single circuit execution; multiple repetitions are needed to obtain the sought-after quantity as an average over many measurements.

Our new paper also sheds light on this issue. For the first time, purification-based quantum error mitigation techniques were systematically tested on hardware over different system sizes. While the error suppression holds up or improves, the number of repetitions needed to obtain these precise results also grows rapidly, to the point where it quickly becomes the limiting factor. Although this makes it possible to obtain precise results for computations where this was not previously possible, it also means that one must wait longer for these results.
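
The trade-off between precision and repetitions can be sketched with basic statistics: averaging N independent measurements with variance σ² gives a statistical error of about σ/√N. The function below also assumes, purely for illustration, that noise damps the measured signal by a factor `damping` that must be divided out again, which inflates the required number of repetitions by 1/damping². The function name, the parameters and the damping values are hypothetical and are not taken from the paper.

```python
import math


def shots_needed(target_error, damping=1.0, variance=1.0):
    """Rough repetition count N ~ variance / (damping * target_error)**2.

    `damping` is a hypothetical factor (0 < damping <= 1) by which noise
    shrinks the measured signal; `variance` is the single-shot variance
    (at most 1 for outcomes of +1 or -1).
    """
    return math.ceil(variance / (damping * target_error) ** 2)


# Illustrative damping values only; real devices and circuits will differ.
for damping in (1.0, 0.5, 0.1):
    n = shots_needed(target_error=0.01, damping=damping)
    print(f"damping {damping:.1f}: about {n} repetitions for an error of 0.01")
```

In this simplified picture, halving the surviving signal quadruples the number of repetitions, which shows how the measurement cost can become the limiting factor as circuits grow.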

While our outlook on error mitigation is relatively positive, the path from these results to a beyond-classical simulation of chemistry using variational methods is less clear. Significant theoretical and experimental advances are needed to make this practical, and it is unclear whether these will be achieved before the advent of fault tolerance. To achieve useful near-term quantum computation, a paradigm shift to different methods or different applications may be needed.

Thus, fundamental research questions remain to be answered before Feynman’s dream of using quantum computers to simulate quantum systems can become industrially relevant. Google and Covestro are committed to continuing joint research towards this goal.